Real-world AI starts with time series.

Time series starts with InfluxDB.

Join the millions of developers building real-time systems with InfluxDB, the leading time series database.

Developers choose InfluxDB

More downloads, more open source users, and a stronger community than any other time series database in the world.

Try InfluxDB

1B+ downloads of InfluxDB via Docker

1M+ open source instances live today

5B+ downloads of InfluxData's Telegraf

2,800+ contributors

#1 time series database (Source: DB Engines)

Why InfluxDB

Manage high-volume, high-velocity data without sacrificing performance.

High performance at scale

Manage millions of time series data points per second without limits or caps.

Run where you need it

Run at any scale in any environment: in the cloud, on-prem, or at the edge.

Build with the tools you love

Connect to your tech stack using data standards and 5K+ integrations.

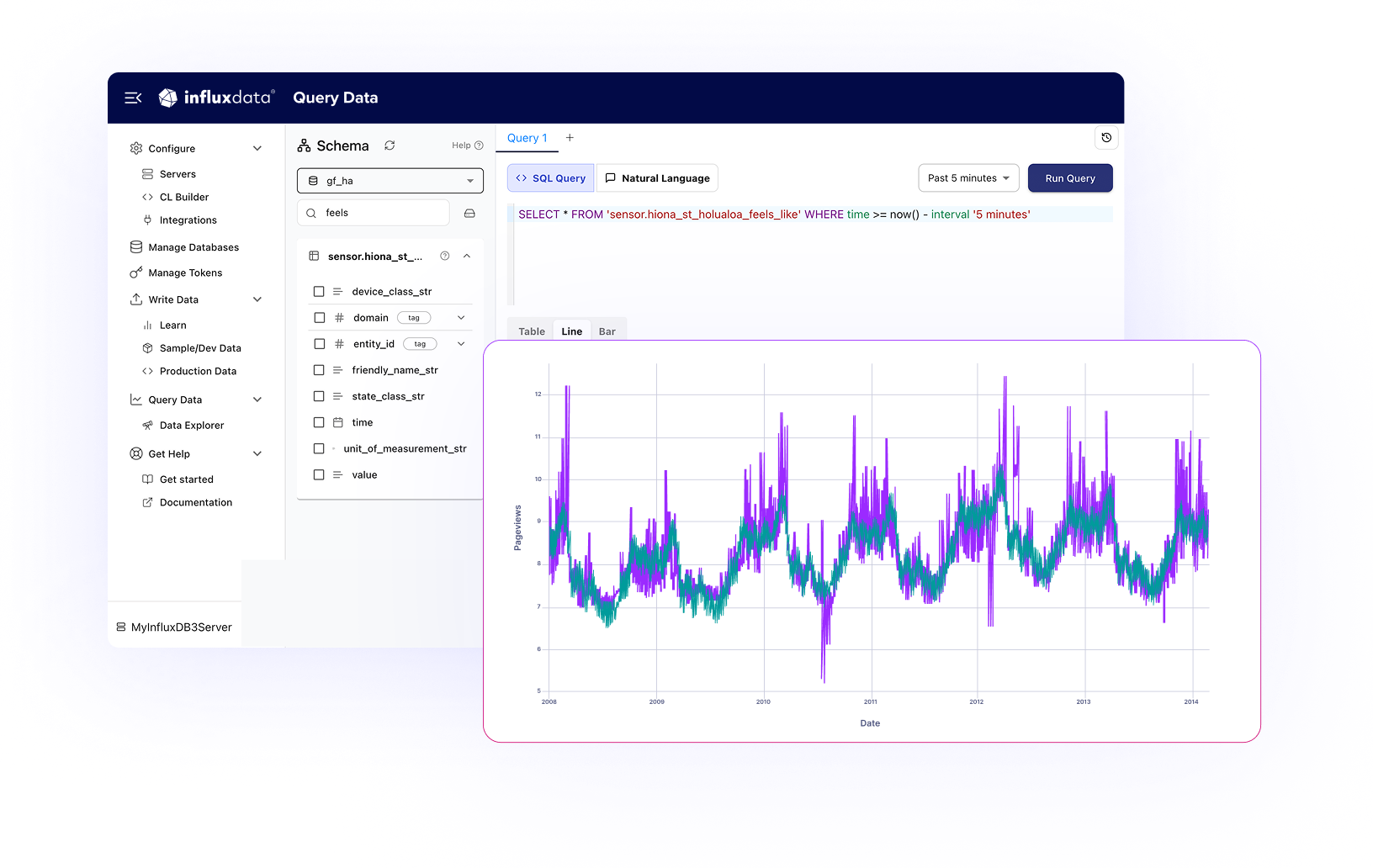

InfluxDB is the engine behind the real-time data pipeline.

Real-time AI requires massive streams of time series data. InfluxDB keeps that data flowing continuously through ingest, analysis, compression, and integration, fueling smarter, more autonomous systems.

See ways to get started

High-Speed Ingest

Ingest millions of series without impacting performance.

Real-Time Analytics

Transform and analyze unlimited series in real time.

Eviction and Lakehouse Integration

Automatically evict cold data and stream it into lakes, warehouses, and AI/ML pipelines.

Compression and Downsampling

Best-in-class compression. Parquet files store more data in less space.
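To make the downsampling idea concrete, here is a minimal sketch in plain Python. It is not InfluxDB's implementation and uses no InfluxDB API; the `downsample` function, the 60-second window, and the sample readings are all hypothetical, chosen only to show how high-resolution points can be averaged into coarser time windows.

```python
from collections import defaultdict
from statistics import mean


def downsample(points, window_seconds):
    """Average (timestamp, value) pairs into fixed-width time windows.

    Illustrative helper only (an assumption, not an InfluxDB function).
    Returns a list of (window_start, mean_value) sorted by window_start.
    """
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    buckets = defaultdict(list)
    for timestamp, value in points:
        # Align each timestamp to the start of the window it falls in.
        window_start = timestamp - (timestamp % window_seconds)
        buckets[window_start].append(value)
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


# Hypothetical sensor readings: (unix_seconds, temperature)
readings = [(0, 20.0), (10, 22.0), (65, 30.0), (70, 34.0), (130, 25.0)]
downsampled = downsample(readings, 60)
print(downsampled)
```

Five raw points collapse into three one-minute averages. The same trade-off applies at any scale: less storage and faster queries in exchange for lower resolution on older data.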

Purpose-built for the world's most demanding systems

From grid stability to satellite telemetry, InfluxDB powers the systems that can't afford to lag.

Physical AI runs on time series

Predict the future based on the past.

InfluxDB captures high-resolution time series data with the precision and context AI models need to infer cause and effect, enabling real-time systems to detect, respond, and predict intelligently.

Learn More

Anomaly Detection

Find failures fast: detect anomalies in real time, trigger automated responses, and adapt as conditions change.

Predictive Maintenance

Prevent downtime before it hits. Monitor equipment health and usage patterns to predict issues and resolve them before they start.

Autonomous Optimization

Build self-correcting systems. Enable models to self-learn and adapt through continuous retraining—automatically.
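As a rough illustration of the anomaly detection use case above, the sketch below flags values that stray too far from a trailing window of recent readings. It is one simple technique (a rolling z-score), not a description of how InfluxDB or any particular model works; the function name, the window size, the threshold, and the sample data are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of values that deviate from the trailing window mean
    by more than `threshold` sample standard deviations.

    Hypothetical helper for illustration; not part of any InfluxDB library.
    """
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(values):
        # Only score a value once a full window of history is available.
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip flat windows (sigma == 0) to avoid flagging on zero spread.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(index)
        history.append(value)
    return anomalies


# Hypothetical vibration readings with one obvious spike at index 6.
vibration = [1.0, 1.1, 0.9, 1.0, 1.2, 1.1, 9.0, 1.0, 1.1]
flagged = detect_anomalies(vibration)
print(flagged)
```

Note that a flagged value still enters the history window, which temporarily widens the spread and makes the detector less sensitive right after a spike. A production system would likely exclude or down-weight flagged points.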

Build systems and infrastructure monitoring that scales

Keep pace with the flood of time series data. Learn how to build real-time monitoring that scales securely with InfluxDB and Grafana to keep ahead of outages, performance regressions, and costly blind spots.

Deploy anywhere

Whether you're building on-prem, in a private or multi-tenant cloud, or at the edge, InfluxDB meets developers where they are.

Open and extensible

Ingest from anywhere, deploy your way, and integrate with everything in your stack.

Explore integrations

Code in the languages you love

Read more in docs

Python

JavaScript

Go

C#

300+ Telegraf plugins

Integrate your favorite tools with Telegraf, our popular open source connector with 5B+ downloads.

Client libraries

InfluxDB client libraries make it easy to integrate time series data into your applications using your favorite programming languages.

Community and ecosystem

InfluxDB is backed by a massive community of cloud and open source developers to help you work the way you want.